Helios recently embarked on a short, two-week internal project designed to get us acquainted with creating multiplayer virtual reality applications in Unreal Engine 4. The HTC Vive was our VR hardware of choice, since it's room-scale ready and includes motion controllers. With the hardware in mind, we decided to cap the experience at two players, each placed in a separate physical play space but sharing the same virtual space. The goals were to promote interaction and competition, and to let players experience a synchronized virtual world. The concept we settled on had both players competitively catching fireflies in the game world. Since we couldn't guarantee that both players would be within earshot of each other, we decided to create avatars that could express themselves through emojis, colors, and the light they emit, which meant adding some simple controls to the HTC Vive controller touchpads.

To accomplish any of this, we had to look into Unreal's existing networking features, and we were not disappointed. Unreal offers an outstanding assortment of single-click networking features, including basic settings available on every Actor. For instance, "Replicates" causes an actor spawned on the server to have a duplicate spawned on every connected client, and "Replicate Movement" replicates the actor's position and rotation from the server to the clients. We quickly learned that Unreal has a whole suite of networking features that are extremely well documented and implemented; however, they focus more on traditional games than on VR.

What do we mean by that? Traditional games operate on the idea that a character has a single position and rotation at any given time. In VR, however, you suddenly have to keep track of more than one position and rotation for each player: the head and both hands, in addition to any items the player is interacting with or holding. Meanwhile the actor's origin stays stationary, so all those convenient actor-level features never see the movement that matters. You need to manage all of the replication yourself.

This is important to note because you learn very quickly that replication only works from server to client(s). A client-side actor must call a server RPC, which either updates replicated variables or calls a multicast event from the server, and the result then reaches the clients. We expand on this pattern below.
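To make the one-way rule concrete, here is a minimal model in plain C++. It is not UE4 code (the project itself used Blueprints), and `ReplicatedFloat`, `Replicate()` and `ServerSet()` are hypothetical names: `Replicate()` stands in for the engine copying a replicated value from the server to the clients, and `ServerSet()` stands in for a client calling a "Run on Server" RPC.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Engine-free model of replication direction (illustrative only, not UE4 API).
// The server owns the authoritative value; a replication pass copies it to
// every client. Nothing ever flows from a client copy back to the server.
struct ReplicatedFloat {
    float server = 0.0f;         // authoritative copy, lives on the server
    std::vector<float> clients;  // one local copy per connected client

    explicit ReplicatedFloat(std::size_t numClients) : clients(numClients, 0.0f) {}

    // Models the engine pushing the server's value down to all clients.
    void Replicate() {
        for (float& copy : clients) copy = server;
    }

    // Models a client calling a "Run on Server" RPC: in this model, the only
    // way a client can change the authoritative value.
    void ServerSet(float value) { server = value; }

    float ClientValue(std::size_t client) const {
        assert(client < clients.size());
        return clients[client];
    }
};
```

In this model, a client that writes its own copy directly is overwritten by the server's value on the next replication pass; the only change that sticks is one the client asks the server to make.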

The First Step

The first step was to connect two machines:

- Blueprints were used for the entire project, aside from some in-house VR modules that add extra controller support. This gave us a clear overview of the project, as well as a visual representation of how replication and networking worked.

- When creating a multiplayer game in Unreal, you are required to set an online subsystem. These subsystems are expected to be services like Steam, PlayStation Network, or Xbox Live. However, if this is set to "Null", it defaults to LAN. To set the online subsystem, open your Project.Build.cs and uncomment the commented-out online subsystem section of the code. Next, add "OnlineSubsystemNull" to PublicDependencyModuleNames.

- We took full advantage of Epic's Learning content and copied nearly all of our online Blueprint code from the provided "Multiplayer Shootout" example. It's a fantastic introduction to how Unreal handles hosting a game, joining a game, handling errors, and other network issues. It also provides a good look at how Game Mode events like OnPostLogin work and what they can be used for.
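As an illustration of what OnPostLogin can be used for, the sketch below models a server-side hook that hands each arriving player a slot and an avatar color in a two-player session. It is plain C++ rather than UE4 code, and `TwoPlayerGameMode`, `PlayerSetup`, the slot numbers and the color names are all hypothetical. It assumes admission (turning away a third player) has already been decided before login completes, so it only asserts the cap.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical sketch of OnPostLogin-style setup (plain C++, not UE4 API).
// The server calls OnPostLogin once per player after login succeeds and
// uses it to hand out per-player settings.
struct PlayerSetup {
    std::string name;
    int slot;           // 0 or 1: could pick a spawn point or play space
    std::string color;  // hypothetical avatar color
};

class TwoPlayerGameMode {
public:
    static constexpr std::size_t kMaxPlayers = 2;

    PlayerSetup OnPostLogin(const std::string& playerName) {
        // Assumption: a third player is rejected before reaching this point.
        assert(players_.size() < kMaxPlayers);
        static const char* const kColors[kMaxPlayers] = {"Blue", "Orange"};
        const std::size_t slot = players_.size();
        players_.push_back({playerName, static_cast<int>(slot), kColors[slot]});
        return players_.back();  // returned by value, so callers keep a stable copy
    }

    std::size_t NumPlayers() const { return players_.size(); }

private:
    std::vector<PlayerSetup> players_;
};
```

Doing this work on the server, once per login, means both players agree on who got which slot without any extra negotiation between clients.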

Machines Connected

Once we had two machines connected, we had to tackle the task of getting data between them. The approach below is best described as the "hacky way", as it consists of a number of brute-force implementations that put a heavy load on the LAN. It does not optimize anything at all, and it doesn't take full advantage of Unreal's networking features, such as replicated variables. But it works, and it's stable. For a complete understanding of all the replication methods, read Unreal's Replication Documentation.

The following pattern was used for everything that required replication. We will use the HMD's position and orientation as the example, since it is one of the key things to replicate to other clients:

- Trust the clients. Since cheating isn't a concern for this project, we elected to trust everything the client tells the server. This also means no client-side prediction is required.

- Separate server and client logic with event graphs. Create a new event graph called "Server" in every actor that requires replication, and move your server-side functions into that graph. This was mostly an organizational choice to help keep track of which functions were run where.

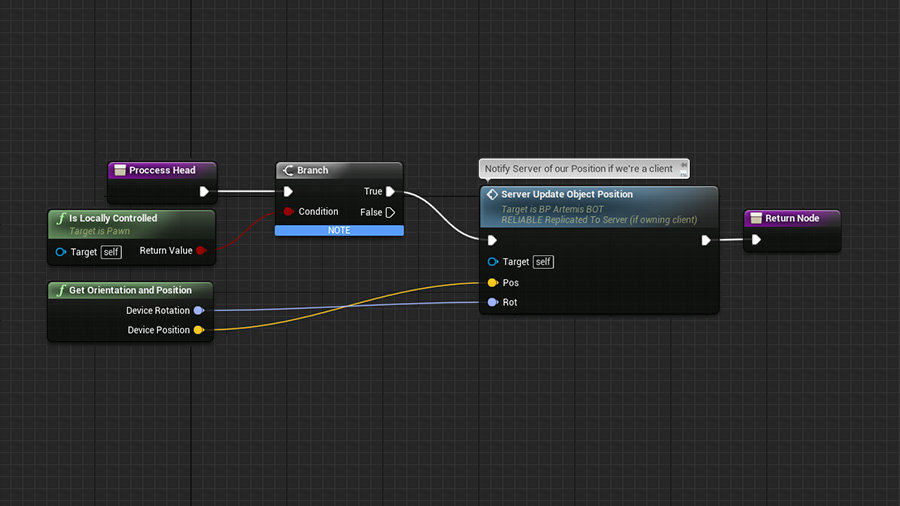

- Send all relevant data to the server. Anything that needs to be replicated needs a custom event whose replication is set to "Run on Server". You can pass into this event all the data you want replicated across your clients; in this case, the position and rotation of the HMD.

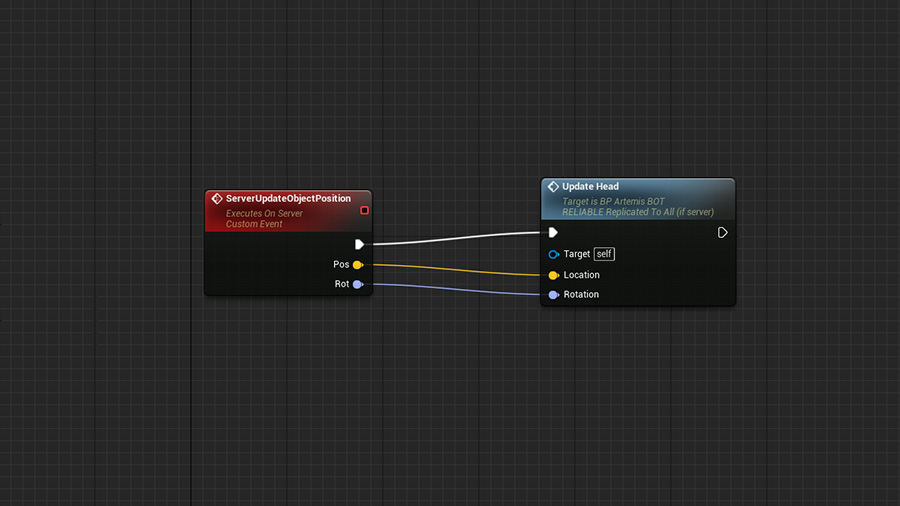

- Multicast everything from the server. The server's only real job is to relay the data from the previous step by calling the appropriate replicated "Multicast" event, which runs on all connected clients. Only the server can broadcast a Multicast event to every client (called from a client, it runs only on that client), which is why clients send the data to the server first.

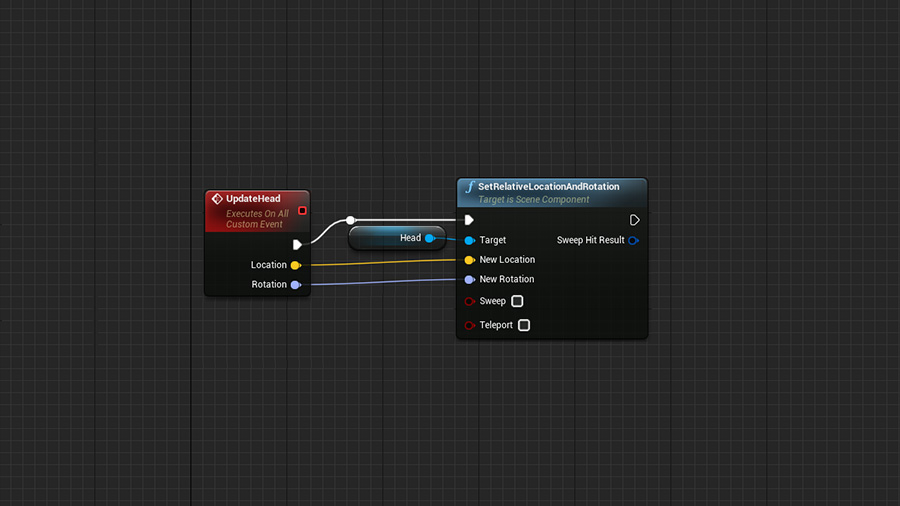

- Finally, because the server is the one that called the multicast event, the data is sent to every client. All clients receive it and apply the positional and rotational update, so every copy of the actor is updated based on its user's input, or head movement in this case.
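The relay pattern above can be sketched in plain C++ as a model of the data flow, not as UE4 code. `Session`, `ServerUpdateHead` and `MulticastUpdateHead` are hypothetical names standing in for the Blueprint "Run on Server" and "Multicast" custom events, and each client's copy of every player's head pose is kept in a table so the effect of the multicast is visible.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Engine-free model of the Run on Server -> Multicast relay (not UE4 API).
struct Vec3 { float x = 0.0f, y = 0.0f, z = 0.0f; };
struct Rotator { float pitch = 0.0f, yaw = 0.0f, roll = 0.0f; };
struct HeadPose { Vec3 position; Rotator rotation; };

class Session {
public:
    // views_[c][p] is client c's copy of player p's head pose.
    explicit Session(std::size_t numPlayers)
        : views_(numPlayers, std::vector<HeadPose>(numPlayers)) {}

    // "Run on Server" event: a client hands its own HMD pose to the server.
    // The server trusts it as-is (no validation, no prediction) and relays it.
    void ServerUpdateHead(std::size_t fromPlayer, const HeadPose& pose) {
        assert(fromPlayer < views_.size());
        MulticastUpdateHead(fromPlayer, pose);
    }

    const HeadPose& View(std::size_t client, std::size_t player) const {
        assert(client < views_.size() && player < views_.size());
        return views_[client][player];
    }

private:
    // "Multicast" event: called by the server, runs on every client, and
    // each client applies the pose to its copy of that player's pawn.
    void MulticastUpdateHead(std::size_t player, const HeadPose& pose) {
        for (std::vector<HeadPose>& clientView : views_) clientView[player] = pose;
    }

    std::vector<std::vector<HeadPose>> views_;
};
```

Because the server trusts its clients, `ServerUpdateHead` forwards the pose untouched; it is also the single point where validation would go if cheating ever became a concern.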

Things to Note

- IsLocallyControlled: Always use IsLocallyControlled to ensure you're only sending data from the player's own pawn, and not from the remote pawns that also exist locally. An instance of the pawn class is replicated to each client to represent the other person, so your code runs for both your local pawn and the 'ghost' pawn (the other player). An IsLocallyControlled check ensures we only send our own client's data, and never the 'ghost' pawn's. Failing to do this will turn things into a total mess.

- Our fireflies existed only on the server, with their position and rotation replicated to the clients. This added a lot of extra traffic to the LAN. Ideally, the fireflies would be deterministic and simulated locally on each client.

- Take full advantage of PlayerState. Every connected client's PlayerState is available to the Game Mode (which exists only on the server). This makes PlayerState a great place to store values like score or health, and when the Game Mode detects a winning score or a death, it can handle it appropriately.
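The IsLocallyControlled rule can be modeled in a few lines of plain C++. This is not UE4 API: `Pawn`, its `locallyControlled` flag (standing in for IsLocallyControlled) and `TickPawns` are hypothetical, and the sketch assumes one VR player per machine, as in this project.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical model (plain C++, not UE4 API). Every machine ticks every pawn
// it knows about, including the "ghost" pawn that represents the remote
// player. Only the pawn this machine controls may send HMD data.
struct Pawn {
    int ownerPlayer;         // which player this pawn represents
    bool locallyControlled;  // stands in for IsLocallyControlled()
};

// Ticks all pawns on one machine; returns how many server sends happened.
int TickPawns(const std::vector<Pawn>& pawnsOnThisMachine,
              const std::function<void(int)>& sendHeadToServer) {
    int sent = 0;
    for (const Pawn& pawn : pawnsOnThisMachine) {
        if (!pawn.locallyControlled) continue;  // ghost pawn: never send
        sendHeadToServer(pawn.ownerPlayer);
        ++sent;
    }
    // With one VR player per machine, at most one pawn should ever send.
    assert(sent <= 1);
    return sent;
}
```

On a machine holding player 0's pawn and player 1's ghost, only player 0's update goes out; without the check, that machine would also send its own HMD data on behalf of the ghost pawn (and trip the assertion).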

Testing

Now that we have actors on the server and clients and they are all replicating, we need to start testing, and this is where things get more difficult.


- You cannot easily test two clients on the same machine. HMDs do not like being used by two applications at once; performance quickly becomes very poor (below one frame per second), if it works at all.

- We used listen servers to host. This allowed the host to play while also acting as the server, and it gave us more control when debugging.

- You can expect a lot of "server sees the update but the client doesn't" issues. These stem from not understanding how UE4's server-client networking model works, not from any issues in UE4's code. You just need to take the time to wrap your head around replication and all the ways it can be used.

- VR multiplayer absolutely requires two developers every time you want to test. Ever tried wearing two HMDs and wielding four motion controllers? No? Me neither.

- Multiplayer games cannot be tested across two machines from the editor. If both devs are working from the same map (even the same saved version) and try to use Play In Editor (PIE), you'll get a map version mismatch, because each editor generates its own PIE version of the map.

- The solution is NOT to use "Standalone", as Standalone doesn't work with VR! So you have to use VR Preview, which brings you back to the PIE mismatch.

- The solution is also NOT to use "Launch". Yes, this will cook the content and launch it from disk while still allowing HMDs to connect. However, Launch only cooks and packages the level currently open in the editor.

- So the VR solution is to familiarize yourself with Unreal Frontend for generating builds. This tool lets you customize how builds of your game are made and deployed.

- The most important piece, the "Project Launcher", can be accessed from the "Launch" dropdown menu in the editor.

- The Project Launcher lets you create "Custom Launch Profiles", in which you can define a "By the Book" cook that includes all the maps you need, among other things.

Shipping Build

Finally, it's time to make a full shipping build of your project and deploy it on a few machines for play-testing. Before building, make absolutely sure you have added DefaultPlatformService=Null under the [OnlineSubsystem] section of your DefaultEngine.ini file. Without this, your game will be built without a defined online subsystem, and network connections will always fail.

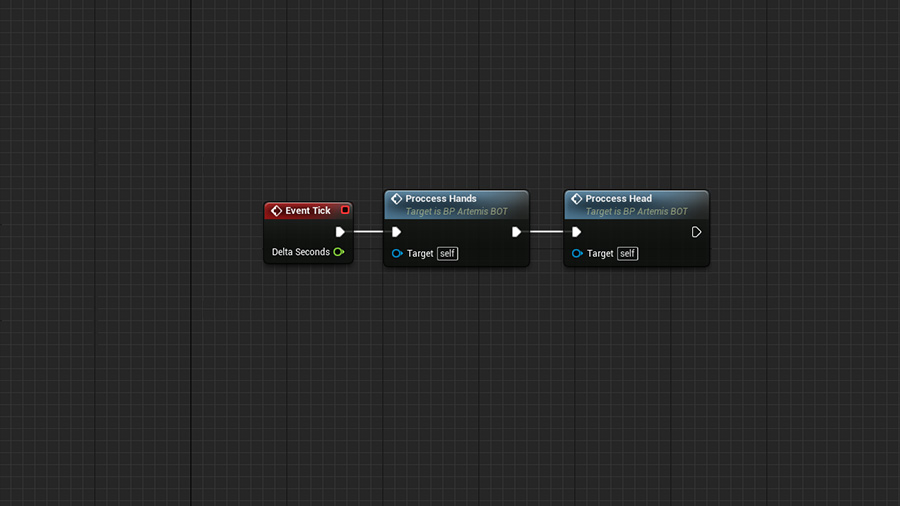

Tick Event on the Player Pawn.

If locally controlled, we send the HMD orientation to the server.

Server passes data to all clients.

Server passes data to all Player Pawns, which then set the head orientation.

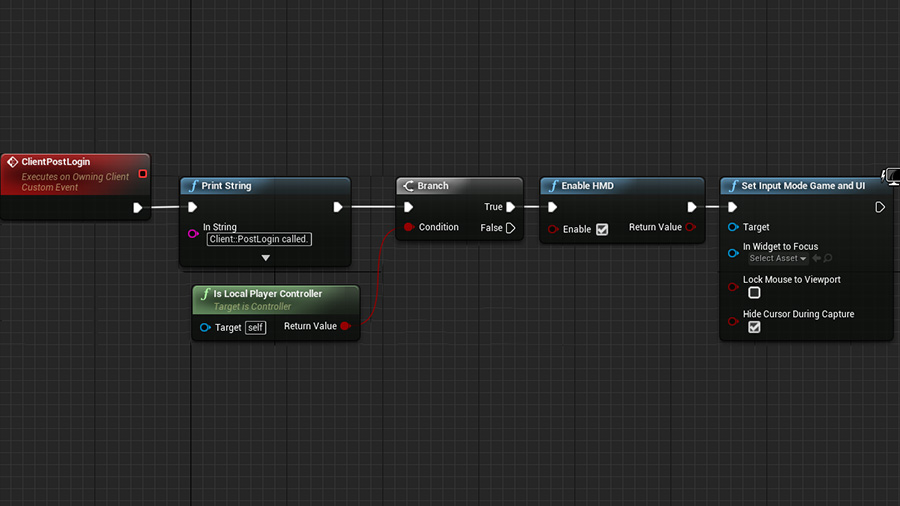

GameMode Client Post Login example. Very powerful!

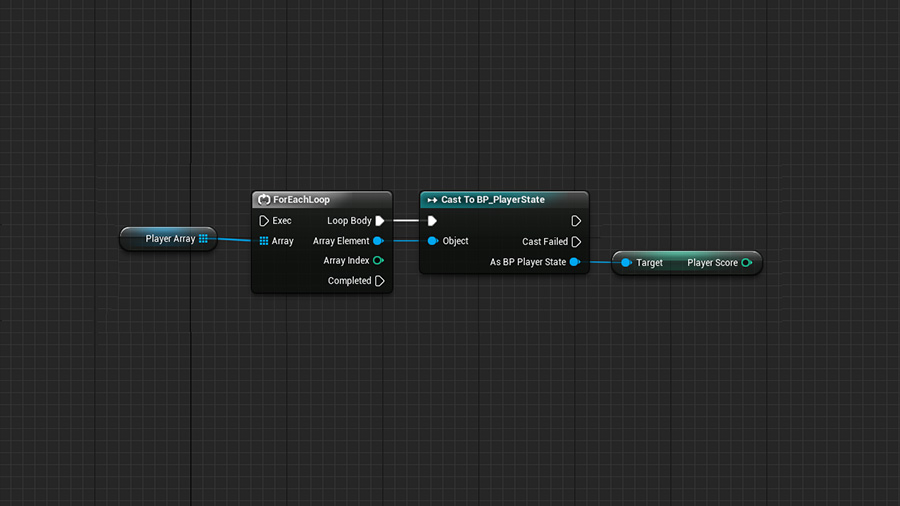

GameState example of how to get all PlayerStates for a victory condition.
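A victory check over all PlayerStates boils down to a scan like the one below. It is a plain C++ sketch, not UE4 code: `PlayerState` here is a hypothetical two-field struct standing in for the engine class, `FindWinner` is a hypothetical helper, and in UE4 the list being scanned would be the GameState's PlayerArray.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical model (plain C++, not UE4 API): the server walks the list of
// PlayerStates and checks whether anyone has reached the winning score.
struct PlayerState {
    std::string playerName;
    int score = 0;  // e.g. fireflies caught
};

// Returns the index of the first player at or above winningScore, or -1.
int FindWinner(const std::vector<PlayerState>& playerStates, int winningScore) {
    assert(winningScore > 0);
    for (std::size_t i = 0; i < playerStates.size(); ++i) {
        if (playerStates[i].score >= winningScore) return static_cast<int>(i);
    }
    return -1;
}
```

Running the check only on the server keeps a single authority on who won; the result can then be multicast to both players like any other update.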

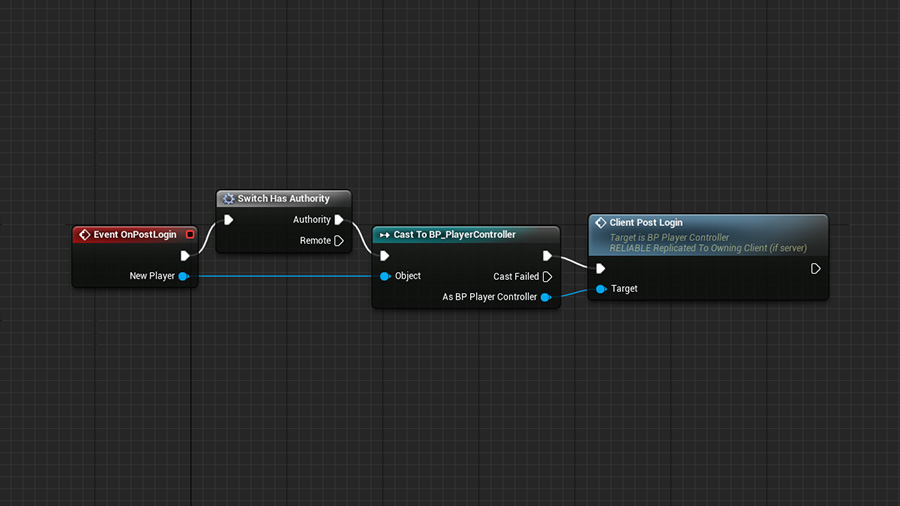

GameMode OnPostLogin example, useful for setting up players right after login!


Project Summary

In the end, the project was a success in that we were able to explore the complexities of creating a multiplayer virtual reality experience in Unreal Engine 4. We learned how important player-to-player communication is: even simple interactions like emoji feedback add so much to the experience and create a connection between the two players. Another 'must' is player-to-player physical feedback. We included the ability for players to swat each other with their firefly nets, which made a silly scrambled emoji face appear on the struck player. Simple easter eggs like this add a lot to the experience and make players feel like they are actually part of the same world. Without them, players can see each other but feel isolated, and this quickly ruins the immersion of the experience.

Our biggest takeaway is the added overhead of testing. Because testing quickly becomes so time-consuming for both the devs and QA teams, any project timeline is going to be stretched by this simple fact, and without sufficient testing a project can quickly fall into disarray. Because of this, we bit off more than we could chew with a two-week project, but we managed to accomplish a lot, and we hope to share this concept piece with future clients in the hope of making something full-scale for fans, users, and clients to remember.

Developed by Oliver Barraza & Matt Hoffman